Scraper

The securities list is downloaded from HKEX.

import requests

from bs4 import BeautifulSoup

import re

import numpy as np

import pandas as pd

import pdfplumber

import os

from tqdm import tqdm

file_errors_location = 'ListOfSecurities_c.xlsx'

df = pd.read_excel(file_errors_location,skiprows=2)

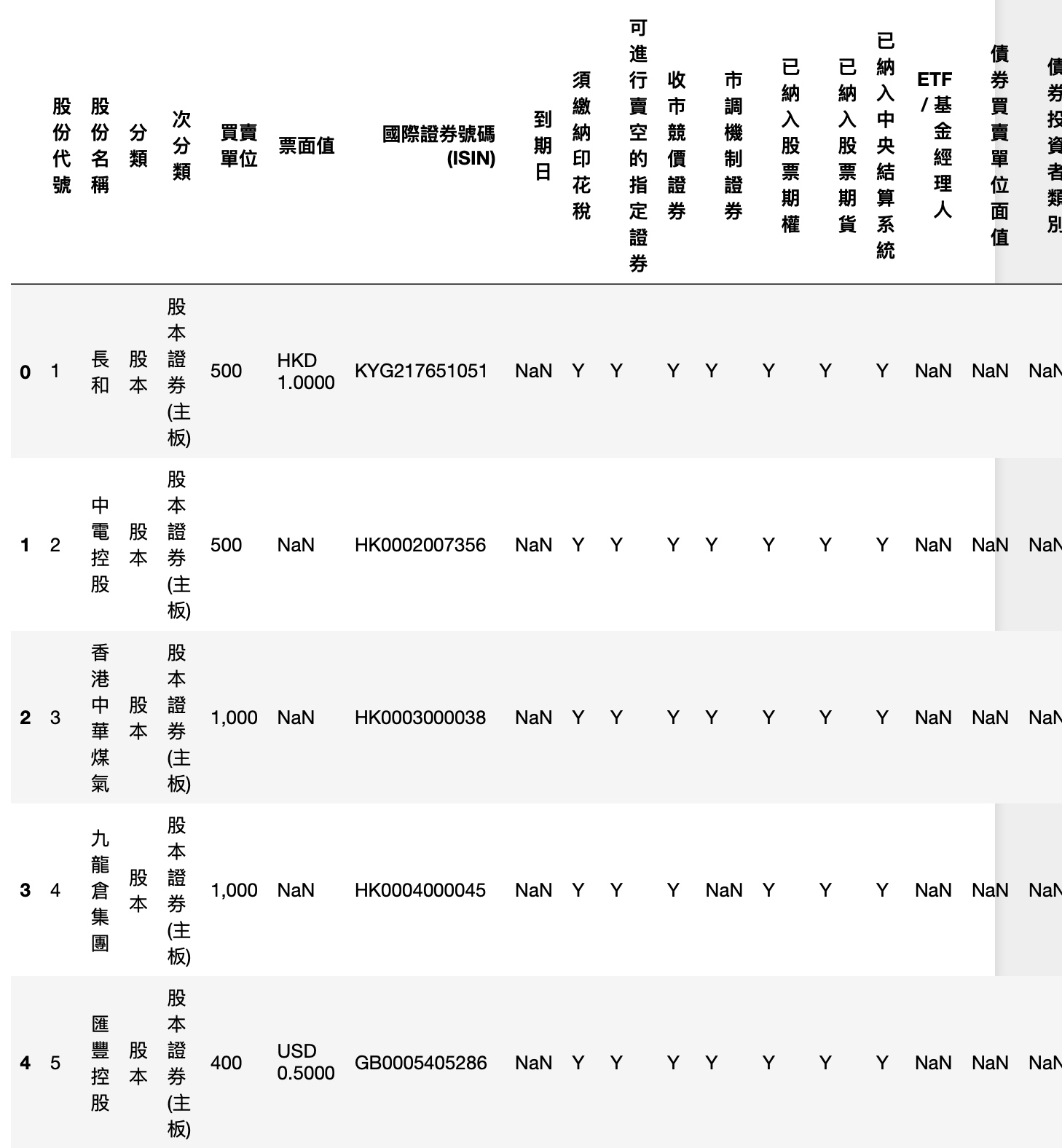

df.head(10)

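# Keep equities (分類 "category" == 股本 "equity") and drop rows whose 次分類 ("sub-category") is 非上市可交易證券 (literally "non-listed tradable securities")

outfile = df[(df[u'分類']=='股本')&(df[u'次分類']!='非上市可交易證券')]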

outfile.head(5)

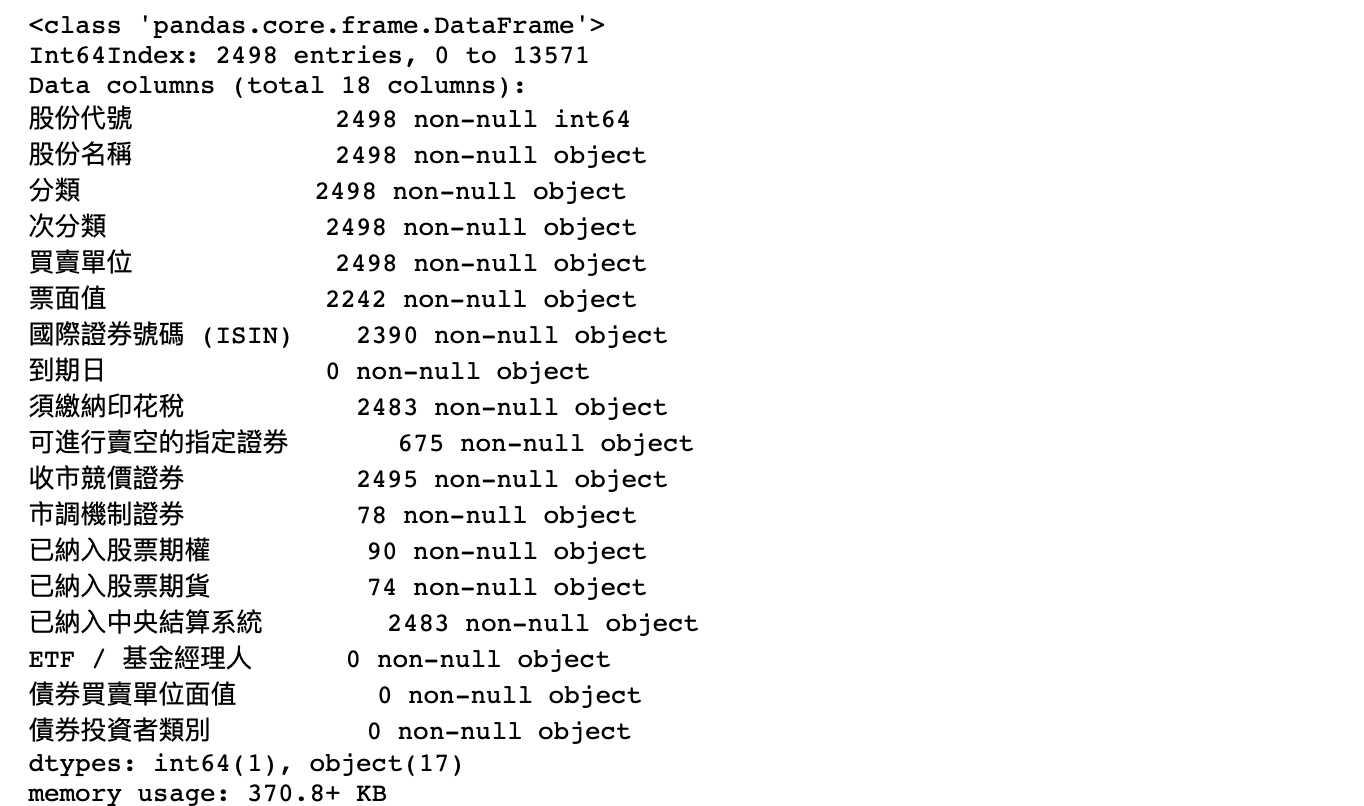

outfile.info()

outfile.to_csv('output_file.csv')

# codeList = list(outfile[u'股份代號'])

# print(codeList)

stock_dict = {}

# Map each stock code (股份代號) to its stock name (股份名稱)

for row in outfile.itertuples():

    stock_dict[row.股份代號] = row.股份名稱

print(len(stock_dict)) # 2498

HTML parser

def parse_html(url):

    # Fetch a page and return its text, or None if the request fails

    try:

        r = requests.get(url, timeout=30)

        r.raise_for_status()

        r.encoding = r.apparent_encoding

        return r.text

    except Exception as e:

        print('Failed:'+str(e))

        return None

Locate the annual report

BASE_URL = 'https://webb-site.com'

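def get_pdf_link(code):

    # Open the company page and look for its 'Financials' link

    demo = parse_html(BASE_URL+'/dbpub/orgdata.asp?code='+str(code)+'&Submit=current')

    soup = BeautifulSoup(demo,'html.parser')

    temp = soup.find_all('li')

    res = None

    for i in temp:

        if i.a and i.a.string =='Financials':

            res = i.a.attrs['href']

            break

    if res is None:

        raise ValueError('No Financials link found for code '+str(code))

    # print(res)

    # Open the financials page and take the first document link in the table

    docpage = parse_html(BASE_URL+res)

    soup4doc = BeautifulSoup(docpage,'html.parser')

    docList = soup4doc.find_all('td',attrs={'style':'text-align:left'})

    # print(len(docList))

    pdf_url = None

    for i in docList:

        if i.a:

            pdf_url = i.a.attrs['href']

            break

    if pdf_url is None:

        raise ValueError('No document link found for code '+str(code))

    print(pdf_url)

    return pdf_url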
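The scraped `href` values are joined to `BASE_URL` by string concatenation, which only works when the link is a root-relative path. A minimal sketch, assuming a link could also be a full URL or a path relative to the current page (the source does not say which forms occur), resolves it with the standard library's `urljoin`. The helper name `absolute_url` and the sample links in the usage lines are hypothetical.

```python
from urllib.parse import urljoin

def absolute_url(page_url, href):
    """Resolve a scraped href against the URL of the page it came from."""
    # urljoin returns absolute URLs unchanged and resolves relative ones
    return urljoin(page_url, href)

# Hypothetical sample links, for illustration only
page = 'https://webb-site.com/dbpub/orgdata.asp?code=700'
print(absolute_url(page, '/dbpub/docs.asp?p=1'))
print(absolute_url(page, 'docs.asp?p=1'))
print(absolute_url(page, 'https://example.com/report.pdf'))
```

Resolving against the page URL rather than the site root keeps page-relative links pointing into the same directory as the page that listed them.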

# get_pdf_link(700)

PDF2txt

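def read_parse_pdf(input_path):

    st = ''

    # Open with a context manager so the PDF is closed afterwards

    with pdfplumber.open(input_path) as pdf:

        for t in tqdm(pdf.pages):

            # Crop the page margins before extracting text

            p_crop = t.within_bbox((35, 35, t.width-43, t.height-35))

            # Extract once per page; pages without text return nothing

            text = p_crop.extract_text()

            if text:

                st += text

    return st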
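The crop box passed to `within_bbox` is a tuple of `(x0, top, x1, bottom)` built from fixed margins. A small sketch of that arithmetic with the margins as named parameters follows; `crop_box` is a hypothetical helper, and the A4 page size in the usage line is only an example.

```python
def crop_box(width, height, left=35, top=35, right=43, bottom=35):
    """Return (x0, top, x1, bottom) for a page trimmed by the given margins."""
    x0, y0 = left, top
    x1, y1 = width - right, height - bottom
    # Guard against margins that leave no area to extract from
    if x1 <= x0 or y1 <= y0:
        raise ValueError('margins are larger than the page')
    return (x0, y0, x1, y1)

# An A4 page measured in PDF points (about 595 x 842)
print(crop_box(595, 842))  # (35, 35, 552, 807)
```

Naming the margins makes it easier to tune them for reports whose headers or footers sit at different offsets.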

# read_parse_pdf('./pdf/1_長和.pdf')

Operation script

errorList = []

# Make sure the output folders exist

os.makedirs('./pdf', exist_ok=True)

os.makedirs('./txt', exist_ok=True)

for key,value in stock_dict.items():

    print(key,':',value)

    pdf_path = './pdf/'+str(key)+'_'+value+'.pdf'

    txt_path = './txt/'+str(key)+'_'+value+'.txt'

    if not os.path.exists(txt_path):

        # Any failure (lookup, download, or parsing) is recorded and the loop continues

        try:

            link = get_pdf_link(key)

            pdf_file = requests.get(link)

            pdf_file.raise_for_status()

            # save pdf file

            with open(pdf_path, 'wb') as f:

                f.write(pdf_file.content)

            content = read_parse_pdf(pdf_path)

            # save converted text

            with open(txt_path,'w',encoding='utf-8') as fw:

                fw.write(content)

        except Exception as e:

            print(e)

            errorList.append({key:value})

    else:

        print(txt_path+' already exists')

print("Error list:",errorList)
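The loop builds file names directly from the stock name, which would fail if a name contained a character that is not valid in a path. Whether any name in the HKEX list does is an assumption here, not something the source shows. A minimal sketch of a sanitizing helper follows; `safe_name` is hypothetical, and the code `9999` with the name `A/B Ltd` is a made-up example.

```python
import re

def safe_name(code, name, ext):
    """Build '<code>_<name>.<ext>' with path-unsafe characters replaced."""
    # Replace characters that are invalid on common file systems
    cleaned = re.sub(r'[\\/:*?"<>|]', '_', str(name)).strip()
    return f'{code}_{cleaned}.{ext}'

print(safe_name(1, '長和', 'pdf'))         # 1_長和.pdf
print(safe_name(9999, 'A/B Ltd', 'txt'))  # 9999_A_B Ltd.txt
```

Using one helper for both the PDF and text paths also keeps the two names in the same `code_name` order.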