Google Colab

How to prevent Google Colab from disconnecting?

Open the Chrome inspector (Cmd+Option+I on macOS, Ctrl+Shift+I on Windows/Linux), then copy and paste the code below into the console:

function ClickConnect() {
  console.log("Working");
  document.querySelector("colab-toolbar-button").click();
}

setInterval(ClickConnect, 88888);

The variant below did not work:

function ClickConnect() {
  console.log("Working");
  document.querySelector("colab-toolbar-button#connect").click();
}

setInterval(ClickConnect, 60000);

Upgrade memory on Google Colab FOR FREE

This gives a free upgrade from the default 12 GB of RAM to 25 GB. After connecting to a runtime, run the following snippet:

a = []
while True:
    a.append('1')

After a minute or so, Colab shows a notification saying “Your session crashed.”

This is followed by a screen asking whether you would like to switch to a high-RAM runtime.

Click yes, and you will be rewarded with 25 GB of RAM.
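To confirm how much RAM the runtime really has before and after the switch, one option is to read /proc/meminfo from Python. The sketch below is only an illustration: `parse_meminfo` and `total_ram_gb` are hypothetical helper names, and it assumes a Linux runtime (as on Colab) where /proc/meminfo reports `MemTotal` in kB.

```python
from pathlib import Path


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field name: integer value}.

    Most fields are reported in kB; a few (such as HugePages_Total)
    are plain counts without a unit.
    """
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        # Keep only well-formed "Name:   <number> [unit]" lines.
        if sep and parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


def total_ram_gb(meminfo_path="/proc/meminfo"):
    """Return the total RAM in GiB, based on the MemTotal field (in kB)."""
    info = parse_meminfo(Path(meminfo_path).read_text())
    return info["MemTotal"] / (1024 ** 2)
```

In a notebook cell, `print(f"{total_ram_gb():.1f} GB")` before and after the crash should show whether the runtime was actually switched.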

Upload files to Colab

(From a local drive)

from google.colab import files

uploaded = files.upload()
for fn in uploaded.keys():
    print('User uploaded file "{name}" with length {length} bytes'.format(
        name=fn, length=len(uploaded[fn])))

(From Google Drive)

# Install the PyDrive library; this only needs to run once per notebook
!pip install -U -q PyDrive

from pydrive.auth import GoogleAuth
from pydrive.drive import GoogleDrive
from google.colab import auth
from oauth2client.client import GoogleCredentials

def login_google_drive():
    # Authorize and log in; authentication is only required the first time
    auth.authenticate_user()
    gauth = GoogleAuth()
    gauth.credentials = GoogleCredentials.get_application_default()
    drive = GoogleDrive(gauth)
    return drive

List all files in Google Drive

def list_file(drive):
    file_list = drive.ListFile({'q': "'root' in parents and trashed=false"}).GetList()
    for file1 in file_list:
        print('title: %s, id: %s, mimeType: %s' % (file1['title'], file1['id'], file1["mimeType"]))

drive = login_google_drive()
list_file(drive)

Download to local drive

from google.colab import files

with open('example.txt', 'w') as f:
    f.write('some content')

files.download('example.txt')

Saving Trained Models into Google Drive

Use the following code to save models in Google Colab.

Install the PyDrive library in the Google Colab notebook:

!pip install -U -q PyDrive

from pydrive.auth import GoogleAuth
from pydrive.drive import GoogleDrive
from google.colab import auth
from oauth2client.client import GoogleCredentials

Authenticate and create the PyDrive client:

auth.authenticate_user()
gauth = GoogleAuth()
gauth.credentials = GoogleCredentials.get_application_default()
drive = GoogleDrive(gauth)

Save the model or weights in the Colab working directory, then upload the file to Google Drive:

model.save('model.h5')
model_file = drive.CreateFile({'title': 'model.h5'})
model_file.SetContentFile('model.h5')
model_file.Upload()

Download the model to Google Drive

# Get a handle to the uploaded file in Google Drive by its ID
drive.CreateFile({'id': model_file.get('id')})
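The save-and-upload steps above can be folded into a single helper. The sketch below is a hypothetical `upload_to_drive` function, not part of PyDrive; it assumes `drive` is the authenticated `GoogleDrive` client created earlier and relies only on the `CreateFile`, `SetContentFile`, and `Upload` calls already used in these notes.

```python
import os


def upload_to_drive(drive, local_path, title=None):
    """Upload a local file via a PyDrive-style client and return its Drive file ID.

    `drive` is assumed to be an authenticated pydrive GoogleDrive client.
    `title` defaults to the local file name.
    """
    if not os.path.isfile(local_path):
        raise FileNotFoundError(local_path)
    # Create the Drive file entry, attach the local content, and upload it.
    drive_file = drive.CreateFile({"title": title or os.path.basename(local_path)})
    drive_file.SetContentFile(local_path)
    drive_file.Upload()
    # After the upload, the file object carries the ID assigned by Drive.
    return drive_file["id"]
```

For example, after `model.save('model.h5')`, calling `file_id = upload_to_drive(drive, 'model.h5')` should return the ID that can later be passed to `drive.CreateFile({'id': file_id})`.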

Loading models from Google Drive into Colab

file_obj = drive.CreateFile({'id': '16zbkEN4vqnPvIOvLMF1IaXKYCq5g3Yln'})
file_obj.GetContentFile('keras.h5')

How to mount Google Drive

Google Colab runs in isolation from Google Drive, so you cannot access your Drive directly. You first need to authenticate, grant Colab permission to access the Drive, and then mount it. Add the following code to a cell:

from google.colab import drive
drive.mount('/content/gdrive')

Notice that the drive is mounted under the path /content/gdrive/My Drive. If you run ls, you should be able to see your Drive contents:

!ls /content/gdrive/My\ Drive/Colab\ Notebooks

Save file:

with open('/content/gdrive/My Drive/foo.txt', 'w') as f:
    f.write('Hello Google Drive!')

drive.flush_and_unmount()
print('All changes made in this colab session should now be visible in Drive.')

How to save your model in Google Drive

To save the model, we use the torch.save method:

import torch

model_save_name = 'classifier.pt'
path = f"/content/gdrive/My Drive/{model_save_name}"
torch.save(model.state_dict(), path)

How to load the model from Google Drive

Make sure you have mounted your Google Drive. We can then load the saved model checkpoint from Google Drive by passing its path to torch.load:

model_save_name = 'classifier.pt'
path = f"/content/gdrive/My Drive/{model_save_name}"
model.load_state_dict(torch.load(path))

Import custom modules in Google Colab

from google.colab import drive
drive.mount('/content/gdrive', force_remount=True)

!ls /content/gdrive/My\ Drive/*.py
>>> /content/gdrive/My Drive/mylib.py

!cat '/content/gdrive/My Drive/mylib.py'
>>> def MyFunction():
>>>     print('My imported function')

# We'll need to update our path to import from Drive.
import sys
sys.path.append('/content/gdrive/My Drive')

# Now we can import the library and use the function.
import mylib
mylib.MyFunction()

Run the code from Google Drive

!python3 "/content/drive/My Drive/app/mnist_cnn.py"

Download Dataset (.csv File) to Google Drive

To download a .csv file from a URL into the “app” folder, simply run:

!wget https://raw.githubusercontent.com/vincentarelbundock/Rdatasets/master/csv/datasets/Titanic.csv -P "/content/drive/My Drive/app"

Restart Google Colab

!kill -9 -1

Check GPU RAM

# memory footprint support libraries/code
!ln -sf /opt/bin/nvidia-smi /usr/bin/nvidia-smi
!pip install gputil
!pip install psutil
!pip install humanize

import psutil
import humanize
import os
import GPUtil as GPU

GPUs = GPU.getGPUs()
# XXX: Colab provides only one GPU, and even that is not guaranteed
gpu = GPUs[0]

def printm():
    process = psutil.Process(os.getpid())
    print("Gen RAM Free: " + humanize.naturalsize(psutil.virtual_memory().available), " | Proc size: " + humanize.naturalsize(process.memory_info().rss))
    print("GPU RAM Free: {0:.0f}MB | Used: {1:.0f}MB | Util {2:3.0f}% | Total {3:.0f}MB".format(gpu.memoryFree, gpu.memoryUsed, gpu.memoryUtil*100, gpu.memoryTotal))

printm()

Is GPU Working?

import tensorflow as tf
tf.test.gpu_device_name()

Which GPU Am I Using?

from tensorflow.python.client import device_lib
device_lib.list_local_devices()

What about RAM?

!cat /proc/meminfo

What about CPU?

!cat /proc/cpuinfo

Changing Working Directory

import os

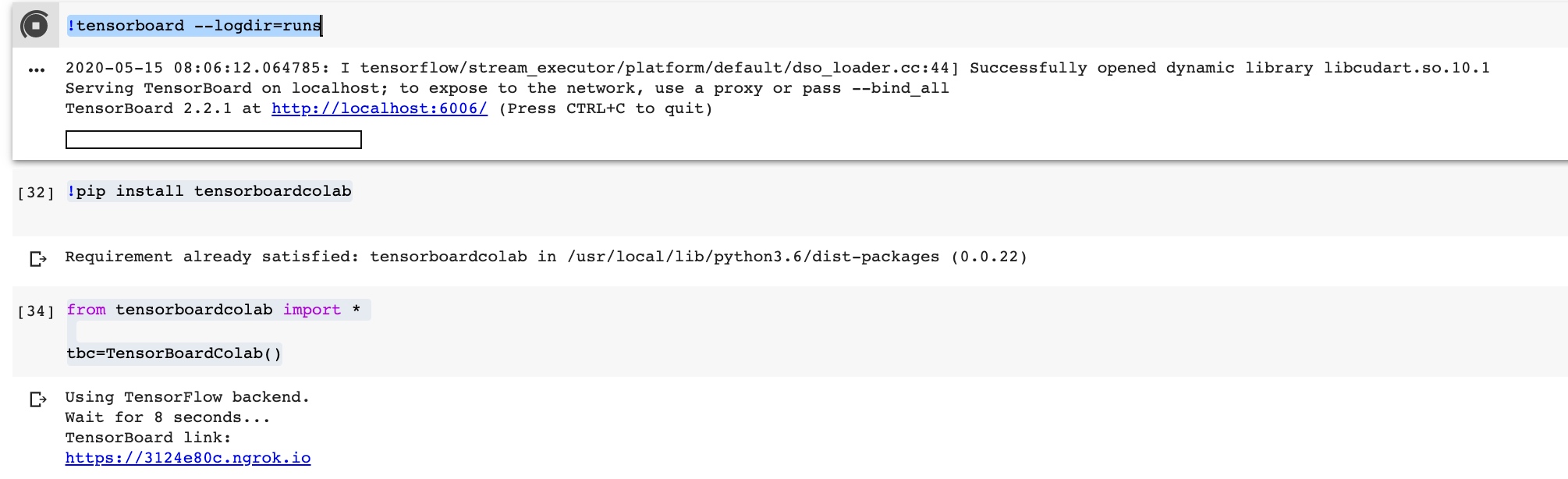

os.chdir("drive/app")Use tensorboard in colab

!pip install tensorboardcolab

from tensorboardcolab import *
tbc = TensorBoardColab()

!tensorboard --logdir=runs  # runs is your <log-directory>

Slurm

Slurm is an open source, fault-tolerant, and highly scalable cluster management and job scheduling system for large and small Linux clusters.

The newer part of the cluster contains the following nodes:

- Korenvliet.ewi.utwente.nl (head node + storage)

- Caserta.ewi.utwente.nl (192 CPUs, 2 TB RAM)

- Ctit061..70.ewi.utwente.nl (16 CPUs, 24 GB RAM)

- Ctit080.ewi.utwente.nl (80 CPUs, 512 GB RAM, 2x GPU Tesla P100/16GB)

- Ctit081.ewi.utwente.nl (64 CPUs, 256 GB RAM, 2x GPU Titan-X/12GB)

- Ctit082.ewi.utwente.nl (64 CPUs, 256 GB RAM, 4x GPU Titan-X/12GB)

- Ctit083.ewi.utwente.nl (64 CPUs, 256 GB RAM, 4x GPU 1080ti/11GB)

korenvliet.ewi.utwente.nl

s2462133

srun -N1 --gres=gpu:1 python3 xx.py

- https://slurm.schedmd.com/quickstart.html

- https://fmt.ewi.utwente.nl/redmine/projects/ctit_user/wiki

- https://fmt.ewi.utwente.nl/redmine/projects/ctit_user/wiki/Local_Software

- https://fmt.ewi.utwente.nl/redmine/projects/ctit_user/wiki/Slurm_srun

- https://fmt.ewi.utwente.nl/redmine/projects/ctit_user/wiki/HPC

- https://redmine.hpc.rug.nl/redmine/projects/peregrine/wiki/Submitting_a_Python_job_

- https://vsoch.github.io/lessons/sherlock-jobs/
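When the same `srun` invocation shown earlier has to be launched for several scripts, it can be convenient to assemble the command in Python. This is a minimal sketch under stated assumptions: `build_srun_command` is a hypothetical helper name, and it only reproduces the `-N` and `--gres=gpu:` options already used in these notes.

```python
import shlex


def build_srun_command(script, nodes=1, gpus=1, python="python3"):
    """Return the argument list for a call like `srun -N1 --gres=gpu:1 python3 xx.py`."""
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    cmd = ["srun", f"-N{nodes}"]
    # Request GPUs only when at least one is wanted.
    if gpus > 0:
        cmd.append(f"--gres=gpu:{gpus}")
    cmd += [python, script]
    return cmd


command = build_srun_command("xx.py")
print(shlex.join(command))  # srun -N1 --gres=gpu:1 python3 xx.py
```

On the head node, the resulting list could be passed to `subprocess.run(command, check=True)`; returning a list rather than a string avoids shell-quoting problems when script paths contain spaces.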