Deployment

Flask Restful API

from flask import Flask, request, jsonify

from flask_cors import CORS

import os

from ast import literal_eval

import pandas as pd

from iasc.predictor_test import generate_BERT_input,predict

app = Flask(__name__)

CORS(app)

# dataset_path = os.environ["dataset_path"]

# reader_path = os.environ["reader_path"]

dataset_path='./iAsc/data/not_expanded_df.csv'

reader_path='./iAsc/models/torch_para_bert_qa_cpu.pth'

df = pd.read_csv(dataset_path, converters={"paragraphs": literal_eval})

@app.route("/api", methods=["GET"])

def api():

    # The question to answer arrives as the "query" URL parameter.

    query = request.args.get("query")

    # from urllib.parse import unquote

    # text = unquote(query, 'utf-8')

    # print('text: ',text,type(text))

    # print('query: ',query,type(query))

    bert_input = generate_BERT_input(query, df)

    prediction = predict(X=bert_input, reader_path=reader_path)

    return jsonify(

        query=query, answer=prediction[0], title=prediction[1], paragraph=prediction[2], score=prediction[3]

    )

if __name__ == '__main__':

    # Bind to all interfaces so the app is reachable from outside a container; Flask's default port is 5000.

    app.run(host="0.0.0.0")

Dockerfile

The Docker base image is from: https://github.com/anibali/docker-pytorch

FROM anibali/pytorch:cuda-10.1

WORKDIR /iasc

COPY requirement.txt /iasc

RUN sudo apt-get update

RUN pip install -r requirement.txt

COPY . /iasc

RUN sudo chmod -R a+rwx /iasc/

EXPOSE 80

CMD ["python","app.py"]

Steps

Installing Nvidia-Docker / Docker

To install Nvidia-Docker, which lets containers use the Nvidia GPU, you can follow this article or the official GitHub repository: https://github.com/NVIDIA/nvidia-docker

The commands below are for Ubuntu 16.04/18.04/20.04 and Debian. For other distributions, check the GitHub link.

distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit

sudo systemctl restart docker

To install Docker without GPU support, use the scripts below:

Remove the existing version of Docker

- Firstly we need to remove the existing version of docker.

sudo apt-get remove docker docker-engine docker.io

- If Docker is not installed on your machine, apt-get will tell you so. That is fine.

Installing Docker (Ubuntu)

sudo apt-get update

sudo apt-get install docker.io

Then change the permissions so that Docker can be used without sudo.

To create the docker group and add your user:

Create the docker group.

$ sudo groupadd docker

Add your user to the docker group.

$ sudo usermod -aG docker $USER

Log out and log back in so that your group membership is re-evaluated.

If testing on a virtual machine, it may be necessary to restart the virtual machine for changes to take effect.

On a desktop Linux environment such as X Windows, log out of your session completely and then log back in.

On Linux, you can also run the following command to activate the changes to groups:

$ newgrp docker

Verify that you can run docker commands without sudo.

$ docker run hello-world

This command downloads a test image and runs it in a container. When the container runs, it prints an informational message and exits.
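Whether a user is listed in the docker group can also be checked from Python. This is a minimal sketch using the standard-library `grp` and `pwd` modules (Unix only); `user_in_group` is a hypothetical helper name, not part of Docker. It reads the group database, so it reflects the configured membership, not whether the current login session has already picked the change up.

```python
import grp
import pwd


def user_in_group(username, group_name):
    """Return True if the user is a member of the group in the system group database."""
    try:
        group = grp.getgrnam(group_name)
    except KeyError:
        # The group does not exist on this machine.
        return False
    if username in group.gr_mem:
        # Listed as a supplementary member (what `usermod -aG` adds).
        return True
    try:
        # Also accept the case where the group is the user's primary group.
        return pwd.getpwnam(username).pw_gid == group.gr_gid
    except KeyError:
        # The user does not exist.
        return False
```

For example, `user_in_group("ubuntu", "docker")` would be expected to return True after the `usermod` command above, assuming the account is named `ubuntu`.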

If you initially ran Docker CLI commands using sudo before adding your user to the docker group, you may see the following error, which indicates that your ~/.docker/ directory was created with incorrect permissions due to the sudo commands.

WARNING: Error loading config file: /home/user/.docker/config.json -

stat /home/user/.docker/config.json: permission denied

To fix this problem, either remove the ~/.docker/ directory (it is recreated automatically, but any custom settings are lost), or change its ownership and permissions using the following commands:

$ sudo chown "$USER":"$USER" /home/"$USER"/.docker -R

$ sudo chmod g+rwx "$HOME/.docker" -R

Verify the installation

- sudo docker run hello-world

- If you see the output message ‘Hello from Docker!’, you are all set.

This message shows that your installation appears to be working correctly. To generate this message, Docker took the following steps:

- The Docker client contacted the Docker daemon.

- The Docker daemon pulled the “hello-world” image from the Docker Hub. (amd64)

- The Docker daemon created a new container from that image which runs the executable that produces the output you are currently reading.

- The Docker daemon streamed that output to the Docker client, which sent it to your terminal.

Commands:

Transfer files

scp -i "edu_st.pem" -r iasc/aws_deployment [email protected]:/home/ubuntu

Replace edu_st.pem and ec2-3-235-66-241 with your own key file and instance host.

sudo docker build -t iasc:latest .

sudo docker run -d -p 5000:5000 iasc

sudo docker ps -a

sudo docker logs cc2e561284de

sudo docker logs -f cc2e561284de # follow the log in real time

http://ec2-35-174-60-49.compute-1.amazonaws.com:5000/chat?query=hi&stock=0700

Note: 5000 is Flask's default port. The host port can be changed in the docker run command:

sudo docker run -d -p 80:5000 iasc

This way the port no longer has to be included in the URL: http://ec2-3-80-176-101.compute-1.amazonaws.com/chat?query=thanks?&stock=0700
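The deployed endpoint can also be queried from Python using only the standard library. The sketch below is illustrative and not part of the original app: `build_chat_url` and `fetch_answer` are hypothetical helper names, the `/chat` path with its `query` and `stock` parameters is taken from the example URL above, and the response is assumed to be JSON.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_chat_url(base_url, query, stock):
    """Return the request URL with both parameters percent-encoded."""
    params = urlencode({"query": query, "stock": stock})
    return f"{base_url.rstrip('/')}/chat?{params}"


def fetch_answer(base_url, query, stock, timeout=10):
    """Send a GET request and decode the body, assuming the API returns JSON."""
    with urlopen(build_chat_url(base_url, query, stock), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Encoding matters for a query such as `thanks?`: a raw `?` or `&` inside a value can be misread as a URL delimiter, whereas `build_chat_url("http://localhost:5000", "thanks?", "0700")` sends it as `query=thanks%3F`.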

sudo docker login

sudo docker images

sudo docker tag iasc:latest charonnnnn/iasc:latest

sudo docker push charonnnnn/iasc:latest

docker pull charonnnnn/iasc:latest

Remember:

Modify the security group so that HTTP traffic on port 80 of your instance is accessible from the outside world.

Reference:

- https://atrisaxena.github.io/projects/deploy-deeplearning-model-kubernetes/

- https://medium.com/datadriveninvestor/deploy-your-pytorch-model-to-production-f69460192217

- https://www.paepper.com/blog/posts/pytorch-gpu-inference-with-docker/

- https://www.paepper.com/blog/posts/pytorch-model-in-production-as-a-serverless-rest-api/

- https://towardsdatascience.com/simple-way-to-deploy-machine-learning-models-to-cloud-fd58b771fdcf

- https://devopstar.com/2019/03/21/openai-gpt-2-pytorch-model-over-a-containerised-api

EC2 instance

When creating the instance, set up the security group with these inbound rules:

Set Type HTTP, Protocol TCP, Port range 80, and Source to “0.0.0.0/0”.

Set Type HTTP, Protocol TCP, Port range 80, and Source to “::/0”.

Set Type Custom TCP, Protocol TCP, Port range 8080, and Source to “0.0.0.0/0”.

Set Type SSH, Protocol TCP, Port range 22, and Source to “0.0.0.0/0”.

Set Type HTTPS, Protocol TCP, Port range 443, and Source to “0.0.0.0/0”.

Note:

- Flask's default port is 5000

- HTTP is 80

- HTTPS is 443
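The inbound rules listed above can also be written down as data. The sketch below builds them in the `IpPermissions` shape that boto3's `authorize_security_group_ingress` accepts. The helper name `build_ingress_rules` is hypothetical, and the call to AWS is left as a comment because it needs boto3, credentials and a real security group ID.

```python
def build_ingress_rules():
    """Return the inbound rules from the list above as IpPermissions dicts."""

    def tcp_rule(port, ipv4=("0.0.0.0/0",), ipv6=()):
        # One TCP rule for a single port, open to the given CIDR ranges.
        rule = {"IpProtocol": "tcp", "FromPort": port, "ToPort": port}
        if ipv4:
            rule["IpRanges"] = [{"CidrIp": cidr} for cidr in ipv4]
        if ipv6:
            rule["Ipv6Ranges"] = [{"CidrIpv6": cidr} for cidr in ipv6]
        return rule

    return [
        tcp_rule(80, ipv6=("::/0",)),  # HTTP over IPv4 and IPv6
        tcp_rule(8080),                # custom TCP
        tcp_rule(22),                  # SSH
        tcp_rule(443),                 # HTTPS
    ]


# With boto3 installed and credentials configured, the rules could be applied with:
#   import boto3
#   boto3.client("ec2").authorize_security_group_ingress(
#       GroupId="sg-...",  # placeholder, use your own group ID
#       IpPermissions=build_ingress_rules(),
#   )
```

Opening SSH to 0.0.0.0/0 is convenient for a test instance; restricting port 22 to your own IP address is the safer choice for anything longer-lived.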

Log in:

ssh -i "techsupport210101.pem" [email protected]

Transfer files:

scp -i "techsupport210101.pem" -r iasc/aws_deployment [email protected]:/home/ubuntu

Replace techsupport210101 and ec2-3-80-217-66 with your own key file and instance host.

Run: if you start the app with the flask run command:

flask run --host=0.0.0.0 --port=80

you will get a permission denied error for port 80.

The workaround is to set the host and port in app.py:

if __name__ == "__main__":

    app.run(host="0.0.0.0", port=80)

and run:

sudo python app.py
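By default on Linux, binding to a port below 1024 requires root privileges, which explains the permission error on port 80. One possible way to avoid editing `app.py` for each environment is to read the bind address from environment variables. `APP_HOST` and `APP_PORT` are hypothetical names chosen for this sketch; the original app does not define them.

```python
import os


def resolve_bind(env=None):
    """Return (host, port, needs_root) from environment-style settings."""
    env = os.environ if env is None else env
    host = env.get("APP_HOST", "0.0.0.0")    # listen on all interfaces by default
    port = int(env.get("APP_PORT", "5000"))  # 5000 is Flask's default port
    needs_root = port < 1024                 # privileged port range on Linux
    return host, port, needs_root
```

In `app.py` this could be used as `host, port, _ = resolve_bind()` followed by `app.run(host=host, port=port)`, so that only `APP_PORT=80` combined with `sudo` needs elevated rights.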

Connecting to a Jupyter notebook on an AWS instance

Set up a password:

jupyter notebook password

Create a self-signed SSL certificate:

cd ~

mkdir ssl

cd ssl

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout mykey.key -out mycert.pem

Start the server:

jupyter notebook --certfile=~/ssl/mycert.pem --keyfile ~/ssl/mykey.key

On my MacBook:

ssh -i "edu_st.pem" -N -f -L 8888:localhost:8888 [email protected]

Then open https://localhost:8888 in a browser.

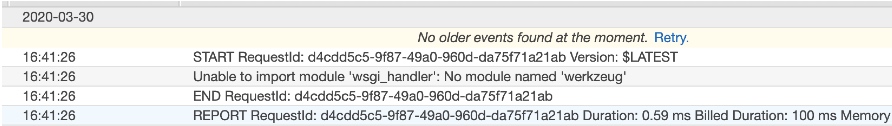
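Before opening the browser, it can help to confirm that the forwarded port is listening. This is a minimal standard-library sketch; `port_is_open` is a hypothetical helper name, and 8888 is the local port from the ssh command above.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

`port_is_open("localhost", 8888)` returning True shows that the tunnel is listening locally; it does not prove that the notebook server on the instance is running.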

Serverless

Serverless + Lambda + Flask + DynamoDB

https://www.serverless.com/framework/docs/providers/aws/guide/serverless.yml/

Converting an existing Flask application

If you already have an existing Flask application, it’s very easy to convert to a Serverless-friendly application. Do the following steps:

- Prepare requirements.txt and flask app.py

Configure your serverless.yml:

You should have a serverless.yml that looks like the following:

# serverless.yml

service: serverless-flask

plugins:

  - serverless-python-requirements

  - serverless-wsgi

custom:

  wsgi:

    app: app.app

    packRequirements: false

  pythonRequirements:

    dockerizePip: non-linux

provider:

  name: aws

  runtime: python3.6

  stage: dev

  region: us-east-1

functions:

  app:

    handler: wsgi_handler.handler

    events:

      - http: ANY /

      - http: 'ANY {proxy+}'

- Make sure that the value for app under the custom.wsgi block is configured for your application. It should be module.instance, where module is the name of the Python file with your Flask instance and instance is the name of the variable with your Flask application.
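To make the module-plus-variable convention concrete, here is a rough sketch of how such a dotted string can be turned into an object with `importlib`. `resolve_app` is a hypothetical helper written for illustration; serverless-wsgi's actual loading logic may differ.

```python
import importlib


def resolve_app(dotted_path):
    """Import the module part of a dotted path and return the named attribute."""
    module_name, _, attr_name = dotted_path.rpartition(".")
    if not module_name:
        raise ValueError(f"expected a dotted path with a module part, got {dotted_path!r}")
    module = importlib.import_module(module_name)
    return getattr(module, attr_name)
```

Under this reading, `app.app` means "import `app.py` and take the variable `app`", and `api.app` means "import `api.py` and take the variable `app`".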

Install the serverless-wsgi and serverless-python-requirements packages

sls plugin install -n serverless-wsgi

sls plugin install -n serverless-python-requirements

Deploy your function with:

sls deploy

Note: if you use other resources (databases, credentials, etc.), you’ll need to make sure those make it into your application. Check out our other material on managing secrets & API keys with Serverless: https://serverless.com/blog/serverless-secrets-api-keys/

Before deploying, the Docker daemon needs to be running:

docker-machine start default

docker-machine env

eval $(docker-machine env)

Deploy:

sls deploy

Test locally:

sls wsgi serve

serverless.yml

service: iasc-saltedge

plugins:

  - serverless-wsgi

  - serverless-python-requirements

custom:

  wsgi:

    app: api.app

    packRequirements: false

  pythonRequirements:

    dockerizePip: true

package:

  exclude:

    - node_modules/**

    - venv/**

provider:

  name: aws

  runtime: python3.7

  stage: dev

  region: us-east-1

  iamRoleStatements: # required; otherwise every operation is denied

    - Effect: Allow

      Action:

        - dynamodb:Query

        - dynamodb:Scan

        - dynamodb:GetItem

        - dynamodb:PutItem

        - dynamodb:UpdateItem

        - dynamodb:DeleteItem

      Resource: "*"

#      Resource:

#        - { "Fn::GetAtt": ["Arn" ] }

#  environment:

#    USERS_TABLE: ${self:custom.tableName}

functions:

  app:

    handler: wsgi_handler.handler

    events:

      - http: ANY /

      - http: 'ANY {proxy+}'

### Below creates the tables in DynamoDB (not needed; for automatic table creation)

#resources:

#  Resources:

#    UsersDynamoDBTable:

#      Type: 'AWS::DynamoDB::Table'

#      Properties:

#        AttributeDefinitions:

#          - AttributeName: psid

#            AttributeType: S

#        KeySchema:

#          - AttributeName: psid

#            KeyType: HASH

##        ProvisionedThroughput:

##          ReadCapacityUnits: 3

##          WriteCapacityUnits: 3

#        BillingMode: PAY_PER_REQUEST

#        TableName: 'users'

#    ConnectionsDynamoDBTable:

#      Type: 'AWS::DynamoDB::Table'

#      Properties:

#        AttributeDefinitions:

#          - AttributeName: connection_id

#            AttributeType: S

#        KeySchema:

#          - AttributeName: connection_id

#            KeyType: HASH

##        ProvisionedThroughput:

##          ReadCapacityUnits: 2

##          WriteCapacityUnits: 2

#        BillingMode: PAY_PER_REQUEST

#        TableName: 'connections'

#    AccountsDynamoDBTable:

#      Type: 'AWS::DynamoDB::Table'

#      Properties:

#        AttributeDefinitions:

#          - AttributeName: account_id

#            AttributeType: S

#        KeySchema:

#          - AttributeName: account_id

#            KeyType: HASH

##        ProvisionedThroughput:

##          ReadCapacityUnits: 2

##          WriteCapacityUnits: 2

#        BillingMode: PAY_PER_REQUEST

#        TableName: 'accounts'

#    TransactionsDynamoDBTable:

#      Type: 'AWS::DynamoDB::Table'

#      Properties:

#        AttributeDefinitions:

#          - AttributeName: transaction_id

#            AttributeType: S

#        KeySchema:

#          - AttributeName: transaction_id

#            KeyType: HASH

##        ProvisionedThroughput:

##          ReadCapacityUnits: 3

##          WriteCapacityUnits: 3

#        BillingMode: PAY_PER_REQUEST

#        TableName: 'transactions'

Creating two DynamoDB tables in serverless.yml:

These two plugins must be installed:

sls plugin install -n serverless-wsgi

sls plugin install -n serverless-python-requirements

The first configures sls wsgi; the second lets sls install the Python dependencies listed in requirements.

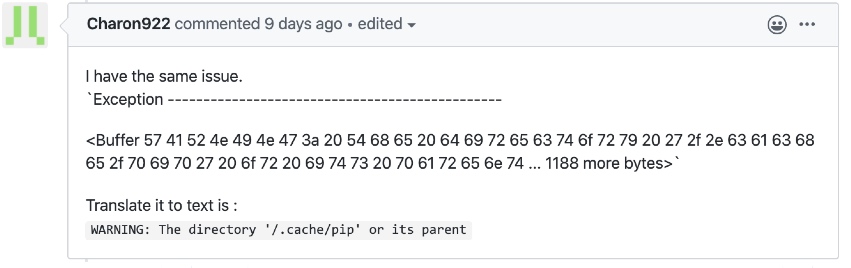

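Issue: https://github.com/serverless/serverless/issues/7084

The fix is to comment out serverless-python-requirements in serverless.yml. That leads to another issue:

the modules are not installed, because serverless-python-requirements is commented out. So installing the two plugins above at the start is all that is needed. I wasted a lot of time looking this up (twice).

View the logs:

sls logs -f app

Ref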

- https://medium.com/@Twistacz/flask-serverless-api-in-aws-lambda-the-easy-way-a445a8805028

- https://serverless.com/blog/flask-python-rest-api-serverless-lambda-dynamodb/

Dealing with Lambda’s size limitations

To help deal with potentially large dependencies (for example: numpy, scipy and scikit-learn) there is support for compressing the libraries. This does require a minor change to your code to decompress them. To enable this add the following to your serverless.yml:

custom:

  pythonRequirements:

    zip: true

and add this to your handler module before any code that imports your deps:

try:

    import unzip_requirements

except ImportError:

    pass

Slim Package

Works in non-‘win32’ environments; Docker and WSL are included. To remove the tests, information and caches from the installed packages, enable the slim option. This will strip the .so files and remove __pycache__ and dist-info directories as well as .pyc and .pyo files.

custom:

  pythonRequirements:

    slim: true

Custom Removal Patterns

To specify additional directories to remove from the installed packages, define a list of patterns in the serverless config using the slimPatterns option and glob syntax. These patterns will be added to the default ones (**/*.py[c|o], **/__pycache__*, **/*.dist-info*). Note that the glob syntax matches against whole paths, so to match a file in any directory, start your pattern with **/.

custom:

  pythonRequirements:

    slim: true

    slimPatterns:

      - '**/*.egg-info*'

To overwrite the default patterns, set the option slimPatternsAppendDefaults to false (true by default).

custom:

  pythonRequirements:

    slim: true

    slimPatternsAppendDefaults: false

    slimPatterns:

      - '**/*.egg-info*'

This will remove all folders within the installed requirements that match the names in slimPatterns.

Option not to strip binaries

In some cases, stripping binaries leads to problems like “ELF load command address/offset not properly aligned”, even when done in the Docker environment. You can still slim down the package while leaving the *.so files unstripped with:

custom:

  pythonRequirements:

    slim: true

    strip: false

Lambda Layer

Another method for dealing with large dependencies is to put them into a Lambda Layer. Simply add the layer option to the configuration.

custom:

  pythonRequirements:

    layer: true

The requirements will be zipped up and a layer will be created automatically. Now just add the reference to the functions that will use the layer.

functions:

  hello:

    handler: handler.hello

    layers:

      - { Ref: PythonRequirementsLambdaLayer }

If the layer requires additional or custom configuration, add it to the layer option.

custom:

  pythonRequirements:

    layer:

      name: ${self:provider.stage}-layerName

      description: Python requirements lambda layer

      compatibleRuntimes:

        - python3.7

      licenseInfo: GPLv3

      allowedAccounts:

        - '*'

Omitting Packages

You can omit a package from deployment with the noDeploy option. Note that dependencies of omitted packages must be omitted explicitly too. This example omits pytest:

custom:

  pythonRequirements:

    noDeploy:

      - pytest